AI and Emerging Technologies: Pioneering a New Era

In today’s rapidly evolving digital landscape, artificial intelligence (AI) and emerging technologies are more than buzzwords: they are driving change across industries. As systems become more interconnected, smart, and autonomous, businesses and individuals alike must adapt to put these innovations to work for a more efficient future.

The Evolution of AI

From its inception in the mid-20th century to its modern-day applications, AI has come a long way. Early developments in machine learning and neural networks paved the way for today’s sophisticated algorithms that can predict trends, understand natural language, and even create art. The continuous evolution of AI is a testament to human ingenuity and the relentless pursuit of progress.

Key Emerging Technologies Transforming AI

Several cutting-edge technologies are converging with AI to create unprecedented opportunities. Here are a few that are making headlines:

- Internet of Things (IoT): With billions of connected devices, IoT provides vast amounts of data that AI can analyze to optimize everything from city infrastructure to home automation.

- Blockchain: Enhancing security and transparency, blockchain technology is being integrated with AI to create robust systems for data management and cybersecurity.

- Edge Computing: By processing data closer to the source, edge computing minimizes latency and enhances real-time decision-making, a crucial factor for autonomous vehicles and smart manufacturing.

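- Quantum Computing: Although still in its early stages, quantum computing promises to accelerate certain classes of problems, such as some optimization and simulation tasks, beyond what classical computers can practically handle. If that promise holds, it could open new possibilities for AI.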

Transformative Impacts Across Industries

The fusion of AI with emerging technologies is reshaping various sectors, including:

- Healthcare: AI-powered diagnostics and personalized treatment plans are transforming patient care. Coupled with IoT devices, healthcare providers can monitor patient health in real time, leading to early interventions.

- Finance: From fraud detection to automated trading, AI is optimizing financial services. Blockchain can strengthen the security and auditability of transactions, supporting trust and transparency.

- Manufacturing: Smart factories use AI-driven robots and predictive maintenance to streamline production processes, reduce downtime, and enhance product quality.

- Retail: Personalized shopping experiences powered by AI, combined with real-time inventory management through IoT, are redefining the retail landscape.
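To make the real-time monitoring idea in the healthcare and manufacturing examples more concrete, here is a minimal sketch of how a stream of sensor readings might be screened for unusual values. It uses a simple rolling z-score, which is only one of many possible techniques; the function name `find_anomalies`, the window size, the threshold, and the sample heart-rate values are all illustrative assumptions rather than anything taken from a real deployment.

```python
from collections import deque
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate sharply from recent values.

    Hypothetical helper: each reading is compared against the mean and
    standard deviation of the previous `window` readings.
    """
    recent = deque(maxlen=window)  # sliding window of the latest readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Skip flat windows (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Made-up heart-rate samples (beats per minute) from an imagined wearable.
heart_rate = [72, 74, 73, 75, 74, 73, 140, 74, 75]
print(find_anomalies(heart_rate))  # prints [6]
```

A check like this is cheap enough to run on the device itself, which is where edge computing comes in: only the flagged readings would need to be sent onward for closer analysis.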

Ethical Considerations and the Road Ahead

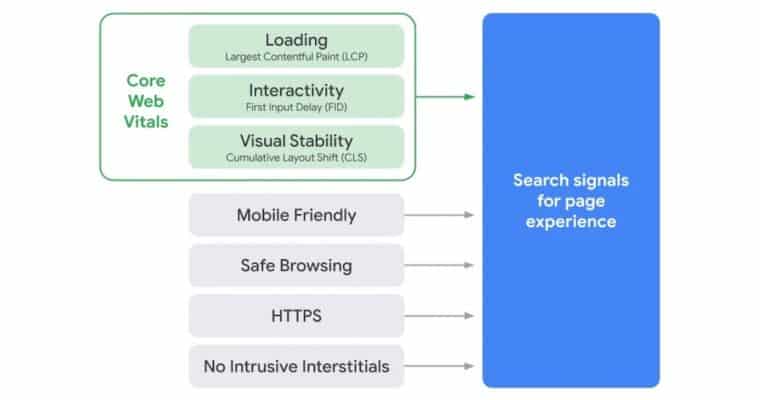

As these technologies continue to evolve, ethical considerations become increasingly important. Questions around data privacy, algorithmic bias, and job displacement need to be addressed to ensure that advancements benefit society as a whole. Transparency in AI development and responsible use of data are critical steps towards building trust and ensuring ethical innovation.
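Algorithmic bias can sound abstract, so here is a small, hedged illustration of one way it is sometimes measured. The sketch below compares approval rates between groups, a quantity often called the demographic parity gap. It is only one of several fairness measures, and the function names, group labels, and decisions shown are invented for the example.

```python
def selection_rates(decisions):
    """Map each group to its approval rate.

    `decisions` is a list of (group, approved) pairs; hypothetical format.
    """
    totals = {}
    approved = {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up loan decisions from an imagined model: (group, approved?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
print(selection_rates(decisions))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A gap of zero would mean every group is approved at the same rate. A large gap does not prove unfairness on its own, but it is a signal that the system deserves a closer look.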

Looking ahead, the integration of AI with emerging technologies holds immense potential. Future advancements could lead to breakthroughs in climate modeling, sustainable energy management, and even space exploration. As industries adapt, collaboration between technologists, policymakers, and educators will be key in shaping a future where technology serves humanity responsibly.

Toward a Smarter, More Connected, and Inclusive World

The intersection of AI and emerging technologies is more than a technological trend—it’s a paradigm shift. As we stand on the brink of a new era, the challenge lies in balancing innovation with ethical responsibility. Embracing these changes will not only drive economic growth but also pave the way for a smarter, more connected, and inclusive world.

Stay tuned for more insights and updates on how these transformative technologies are shaping our future. Whether you’re a tech enthusiast, business leader, or simply curious about the latest advancements, now is the time to dive into the exciting world of AI and emerging technologies.